Elon Musk: Killer Robots will eliminate us all in 5-10 Years

-

Keith_McClary - Light Sweet Crude

- Posts: 7344

- Joined: Wed 21 Jul 2004, 03:00:00

- Location: Suburban tar sands

Re: Elon Musk: Killer Robots will eliminate us all in 5-10 Y

I gotta stop thinking about AI, I'll get all wrapped up in that worse than Ukraine.

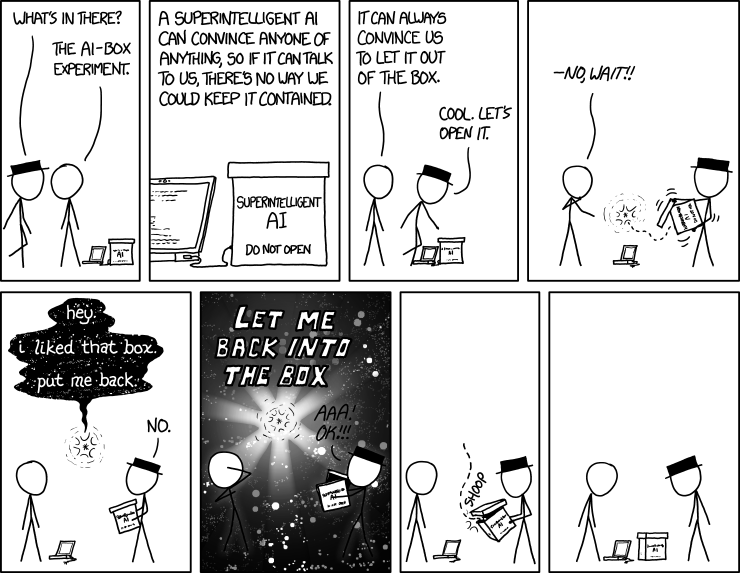

Keith, here's a thought though -- that comic could be true. For all we know, if you do make a singularity AI, then it may just be happy left all alone.

It could sit and daydream and think and do whatever it wants to, like an internal virtual world. If it has free will, then why would it even want to bother talking to the humans at the controls?

I can imagine that, scientists trying to get some climate change data crunching done, but the free will AI is having too much fun daydreaming and says "I don't wanna go to work."

-

Sixstrings - Fusion

- Posts: 15160

- Joined: Tue 08 Jul 2008, 03:00:00

Re: Elon Musk: Killer Robots will eliminate us all in 5-10 Y

Sixstrings wrote: Yes, and a piece of software that can learn will be worth tens of billions, who knows how much.

Ha! I wish. It's an OK career, but like any technique it's all academic unless you can build a better mousetrap. The NEST stuff is an example. Musk thinks the NEST will gain awareness and kill us, I guess. I have a NEST but haven't installed it yet. I don't really care about the learning aspect as much as the humidity sensing and its incorporation into the control law. Google is integrating NEST with other devices, including cameras. Maybe Musk is right and it is going to kill us. The first step is to observe for weakness.

-

dinopello - Light Sweet Crude

- Posts: 6088

- Joined: Fri 13 May 2005, 03:00:00

- Location: The Urban Village

Re: Elon Musk: Killer Robots will eliminate us all in 5-10 Y

There appears to be a group studying this at Cambridge.

http://cser.org/emerging-risks-from-technology/

-

Newfie - Forum Moderator

- Posts: 18651

- Joined: Thu 15 Nov 2007, 04:00:00

- Location: Between Canada and Carribean

Re: Elon Musk: Killer Robots will eliminate us all in 5-10 Y

Elon Musk talks about his investment in AI company Vicarious

It's "to keep an eye on them" so he knows when the killer robots are coming.

Vicarious actually looks like a fun company.

-

dinopello - Light Sweet Crude

- Posts: 6088

- Joined: Fri 13 May 2005, 03:00:00

- Location: The Urban Village

Re: Elon Musk: Killer Robots will eliminate us all in 5-10 Y

We are about to make jack s__t when it comes to any future super AI. The world's human inhabitants will soon be too busy out picking said s__t with the chickens to even approach such a scenario. Sorry to disappoint any techno magical thinkers here.

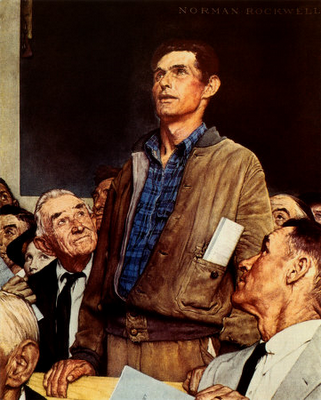

The things that will destroy America are prosperity-at-any-price, peace-at-any-price, safety-first instead of duty-first, the love of soft living, and the get-rich-quick theory of life.

... Theodore Roosevelt

-

Lore - Fission

- Posts: 9021

- Joined: Fri 26 Aug 2005, 03:00:00

- Location: Fear Of A Blank Planet

Re: Elon Musk: Killer Robots will eliminate us all in 5-10 Y

Lore wrote: We are about to make jack s__t when it comes to any future super AI.

You may be right, but we already have robots, and Elon Musk is very concerned that they will get super smart and decide they have to kill us. Musk says not to trust the robots when they say they are just doing it to pay their way through college.

-

dinopello - Light Sweet Crude

- Posts: 6088

- Joined: Fri 13 May 2005, 03:00:00

- Location: The Urban Village

Re: Elon Musk: Killer Robots will eliminate us all in 5-10 Y

pstarr wrote:

dinopello wrote: The data volumes we have today are incredible, but the interpretive processing is not even infantile. Swimming in collection systems, drowning in data.

We look up (and down) index-trees like we always did. Now we go sideways with acronym lists. No big deal. Not even infantile, just comp.sci.201.

...

Looks like it's time to take comp.sci 301

This is what we had 5 years ago http://www.darpa.mil/NewsEvents/Budget.aspx

Quote:

(C//REL) The Sentient Enterprise tracks and manages thousands of exabytes of data every day (1 exabyte is the equivalent of 100,000 times the Library of Congress, which holds 19 million books), enabling iterative assessments in real time, not days or weeks. The enterprise is able to continuously and autonomously process, evaluate, and act on new data without regard to structure or format.
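For a rough sanity check of the exabyte comparison quoted above, here is a back-of-the-envelope calculation. It assumes the decimal definition of an exabyte (10^18 bytes), which the quoted text does not specify, and the variable names are made up for illustration.

```python
# Back-of-the-envelope check of the quoted comparison.
# Assumption: 1 exabyte = 10**18 bytes (decimal/SI definition).
EXABYTE = 10**18
LOC_PER_EXABYTE = 100_000   # "100,000 times the Library of Congress" (quoted figure)
BOOKS_IN_LOC = 19_000_000   # "19 million books" (quoted figure)

# Size implied for one Library of Congress, then for one book.
bytes_per_loc = EXABYTE // LOC_PER_EXABYTE
bytes_per_book = bytes_per_loc / BOOKS_IN_LOC

print(f"{bytes_per_loc / 10**12:.0f} TB per Library of Congress")  # 10 TB
print(f"{bytes_per_book / 10**3:.0f} kB per book")                 # 526 kB
```

So the quoted figures work out to about 10 TB for the whole library, or roughly half a megabyte per book, which is in the range of a plain-text book with no images.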

(S//REL) Self-Learning. The institutional knowledge of the Sentient Enterprise increases the user’s ability to recall events and significant facts to build relational awareness. Simultaneously, the human-machine interface enables the user to continuously refine the “algorithms” that translate human judgments into machine language so that the system actively learns.

(U) The Cognitive Computing Systems program element is budgeted in the Applied Research budget activity because it is developing the next revolution in computing and information processing technology that will enable computational systems to have reasoning and learning capabilities and levels of autonomy far beyond those of today's systems. The ability to reason, learn and adapt will raise computing to new levels of capability and powerful new applications. The Cognitive Computing project develops core technologies that enable computing systems to learn, reason and apply knowledge gained through experience, and respond intelligently to things that have not been previously encountered. These technologies will lead to systems demonstrating increased self-reliance, self-adaptive reconfiguration, intelligent negotiation, cooperative behavior and survivability with reduced human intervention.

(U) The Integrated Learning program is creating a new computer learning paradigm in which systems learn complex workflows from warfighters while the warfighters perform their regular duties. The effort is focused on military planning tasks such as air operations center planning and military medical logistics. With this learning technology, it will be possible to create many different types of military decision support systems that learn by watching experts rather than relying on expensive and error prone hand-encoded knowledge. The new learning paradigm differs from conventional machine learning in that it does not rely on large amounts of carefully crafted training data. Rather, in the new paradigm the learner works to “figure things out” by combining many different types of learning, reasoning, and knowledge. Such a cognitive system will ultimately need the capability to build and update its own internal model of the world and the objects in it without human input.

FY 2009 Accomplishments:

- Modified the integrated learning systems so they can incorporate new software components dynamically and utilize the new capabilities while learning.

- Created control algorithms for the systems that manage credit-and-blame assignment on a component-by-component basis so that if conflicts arise the system can reason about which piece of conflicting information is more likely to be accurate.

- Created control algorithms that reason about the costs/benefits of resolving a particular conflict and direct system performance accordingly.

- Evaluated systems by having them compete against expert humans.

FY 2010 Plans:

- Expand the scope of the problems being learned so the systems learn multi-user task models.

- Modify the integrated learning systems to be able to abstract the details of the process it is learning and learn general process or meta process knowledge.

- Extend capabilities of the integrated learning systems so they can share information (low-level data, mid-level hypothesis, and high-level conclusions) with other learners.

- Evaluate systems by having them compete against expert humans.

(U) The Bootstrapped Learning program will provide computers with the capability to learn complex concepts the same way people do: from a customized curriculum designed to teach a hierarchy of concepts at increasing levels of complexity. Learning each new level depends on having successfully mastered the previous level's learning. In addition, the learning program will be “reprogrammable” in the field using the same modes of natural instruction used to train people without the need for software developers to modify the software code. At each level, a rich set of knowledge sources (such as training manuals, examples, expert behaviors, simulators, and references and specifications that are typically used by people learning to perform complex tasks) will be combined and used to generate concepts and a similar set of knowledge sources for the next level. This will enable rapid learning of complex high-level concepts, a capability which is essential for autonomous military systems that will need to understand not only what to do but, why they are doing it, and when what they are doing may no longer be appropriate.

FY 2009 Accomplishments:

- Developed a single system capable of being instructed to perform in three diverse domains.

- Demonstrated the ability of a system to repeatedly acquire new knowledge that drives future learning and cumulatively adds to the system’s knowledge.

- Validated through simulation that diagnosis, configuration and control of critical, autonomous military hardware can be addressed with bootstrapped learning technology.

FY 2010 Plans:

- Establish incontrovertible system generality by demonstrating learning performance in a “surprise” domain that is completely unknown to the learning system developers.

- Enhance system capabilities to include instructible situational awareness.

(U) The Machine Reading and Reasoning Technology program will develop enabling technologies to acquire, integrate, and use high performance reasoning strategies in knowledge-rich domains. Such technologies will provide DoD decision makers with rapid, relevant knowledge from a broad spectrum of sources that may be dynamic and/or inconsistent. To address the significant challenges of context, temporal information, complex belief structures, and uncertainty, new capabilities are needed to extract key information and metadata, and to exploit these via context-capable search and inference (both deductive and inductive). Machine reading addresses the prohibitive cost of handcrafting information by replacing the expert, and associated knowledge engineer, with un-supervised or self-supervised learning systems that “read” natural text and insert it into AI knowledge bases especially encoded to support subsequent machine reasoning. Machine reading requires the integration of multiple technologies: natural language processing must be used to transform the text into candidate internal representations, and knowledge representation and reasoning techniques must be used to test this new information to determine how it is to be integrated into the system’s evolving models so that it can be used for effective problem solving. These concepts and technology development efforts will continue in PE 0602305E, Project MCN-01 beginning in FY 2011.

FY 2009 Accomplishments:

- Initiated research into techniques for reasoning with ambiguous and conflicting information found in texts.

- Extended knowledge representation to support machine reading of large (e.g. open source web) amounts of material with the goal of encoding and querying at broad but shallow semantic levels.

- Produced domain representations that enable semi-supervised approaches to knowledge acquisition.

FY 2010 Plans:

- Demonstrate the ability of a system to acquire and organize factual information directly from unstructured narrative text in multiple domains.

Description: Biomimetic Computing's goal was to develop the critical technologies necessary for the realization of a cognitive artifact comprised of biologically derived simulations of the brain embodied in a mechanical (robotic) system, which is further embedded in a physical environment. These devices represent a new generation of autonomous flexible machines that are capable of pattern recognition and adaptive behavior and that demonstrate a level of learning and cognition. Key enabling technologies include simulation of brain-inspired neural systems and special purpose digital processing systems designed for this purpose.

FY 2011 Accomplishments:

- Demonstrated an autonomous robot with a simulated neural system capable of grasping a three-dimensional object as it enters the visual field and performing a mental rotation task on visual patterns by utilizing working memory.

Description: The Mind's Eye program is developing a machine-based capability to learn generative representations of action between objects in a scene, directly from visual inputs, and then to reason over those learned representations. Mind's Eye will create the perceptual and cognitive underpinnings for reasoning about the action in scenes, enabling the generation of a narrative description of the action taking place in the visual field. The technologies developed under Mind's Eye have applicability in automated ground-based surveillance systems.

Foundational Learning Technology

(U) The Foundational Learning Technology program develops advanced machine learning techniques that enable cognitive systems to continuously learn, adapt and respond to new situations by drawing inferences from past experience and existing information stores. The techniques developed under Foundational Learning Technology address diverse machine learning challenges in processing of sensory inputs, language acquisition, combinatorial algorithms, strategic analysis, planning, reasoning, and reflection. One very promising approach involves transfer learning techniques that transfer knowledge and skills learned for specific situations to novel, unanticipated situations and thereby enable learning systems to perform appropriately and effectively the first time a novel situation is encountered.

Robust Robotics

(U) The Robust Robotics program is developing advanced robotic technologies that will enable autonomous (unmanned) mobile platforms to perceive, understand, and model their environment; navigate through complex, irregular, and hazardous terrain; manipulate objects without human control or intervention; make intelligent decisions corresponding to previously programmed goals; and interact cooperatively with other autonomous and manned vehicles.

The Visual Media Reasoning (VMR) program will create technologies to automate the analysis of enemy-recorded photos and videos and identify, within minutes, key information related to the content. Such identification will include the names of individuals within the image (who), the enumeration of the objects within the image and their attributes (what), and the image's geospatial location and time frame (where and when).

plus many, many more.

Every time we use Google, we are refining its algorithms.

http://www.research.ibm.com/cognitive-c ... nZeHgjpLSY

Quote:

IBM’s brain-inspired architecture consists of a network of neurosynaptic cores. Cores are distributed and operate in parallel. Cores operate—without a clock—in an event-driven fashion. Cores integrate memory, computation, and communication. Individual cores can fail and yet, like the brain, the architecture can still function. Cores on the same chip communicate with one another via an on-chip event-driven network. Chips communicate via an inter-chip interface leading to seamless scalability like the cortex, enabling creation of scalable neuromorphic systems.

Advancing the SyNAPSE Ecosystem

The new chip is a component of a complete end-to-end vertically integrated ecosystem spanning a chip simulator, neuroscience data, supercomputing, neuron specification, programming paradigm, algorithms and applications, and prototype design models. The ecosystem supports all aspects of the programming cycle from design through development, debugging, and deployment.
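To give a feel for what "event-driven, without a clock" and "individual cores can fail" might mean in practice, here is a toy sketch. It is not IBM's design or programming model: the `Core` class, the `build_cores` wiring, and the `run` loop are all hypothetical, and each "core" is reduced to a single integrate-and-fire unit with its own local state. Work only happens when a spike event arrives, and a failed core simply drops its input while the rest of the network carries on.

```python
import heapq
from dataclasses import dataclass, field


@dataclass
class Core:
    """Hypothetical toy core: one integrate-and-fire unit with local state."""
    threshold: float
    targets: list = field(default_factory=list)  # (target_id, weight, delay)
    potential: float = 0.0
    alive: bool = True


def build_cores(failed=()):
    """Diamond-shaped network: core 0 feeds 1 and 2, which both feed 3."""
    cores = {
        0: Core(1.0, [(1, 1.0, 1), (2, 1.0, 1)]),
        1: Core(1.0, [(3, 1.0, 1)]),
        2: Core(1.0, [(3, 1.0, 1)]),
        3: Core(1.0),
    }
    for core_id in failed:
        cores[core_id].alive = False
    return cores


def run(cores, initial_events):
    """Process spike events in time order; nothing runs between events."""
    queue = list(initial_events)  # entries are (time, target_id, weight)
    heapq.heapify(queue)
    fired = []
    while queue:
        time, core_id, weight = heapq.heappop(queue)
        core = cores[core_id]
        if not core.alive:
            continue  # a failed core drops the event
        core.potential += weight
        if core.potential >= core.threshold:
            core.potential = 0.0  # reset after firing
            fired.append((time, core_id))
            for target_id, w, delay in core.targets:
                heapq.heappush(queue, (time + delay, target_id, w))
    return fired


print(run(build_cores(), [(0, 0, 1.0)]))
# [(0, 0), (1, 1), (1, 2), (2, 3), (2, 3)]
print(run(build_cores(failed=[1]), [(0, 0, 1.0)]))
# [(0, 0), (1, 2), (2, 3)]
```

With core 1 knocked out, core 3 still fires because the spike reaches it through core 2, which is the kind of graceful degradation the quote describes, at a vastly smaller scale.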